From "Feature Maze" to AI Value: A Case Study in Getting It Right

A real-world case study examining why bolt-on AI solutions fail and how to build AI products that actually create value using a structured, holistic approach.

We've all seen it: A powerful product packed with features that users barely touch. This isn't just a product problem; it's a massive missed opportunity. The recent wave of Generative AI has many companies rushing to solve this problem, but as this case study shows, a "bolt-on" AI approach can often make things worse.

Here is a look at a real-world scenario: the problem, the failed decision, and the right way to build AI products that actually create value.

1. The Issue: The "Maze of Features"

A financial analytics company had a platform that was a data goldmine. It was packed with deep insights, complex tables, and powerful visualizations. However, the user experience had become its own worst enemy.

As the team added more features to meet individual requests, the dashboard turned into a "maze of features." Usage analytics revealed a sobering statistic: users were tapping into only 10% to 20% of the platform's capabilities. The product was "screaming" for a smarter, simpler way to surface value.
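A utilization figure like this is typically derived from usage logs. The snippet below is a minimal sketch of that calculation, not the company's actual analytics: the event format, the feature catalog, and the function name `feature_utilization` are all assumptions made for illustration.

```python
from collections import defaultdict


def feature_utilization(events, catalog):
    """Return, per user, the fraction of catalog features used at least once.

    `events` is an iterable of (user, feature) pairs (hypothetical log format).
    `catalog` is the full list of features the product offers (hypothetical).
    """
    catalog = set(catalog)
    if not catalog:
        raise ValueError("catalog must contain at least one feature")

    used = defaultdict(set)
    for user, feature in events:
        # Ignore events for anything that is not a catalogued feature.
        if feature in catalog:
            used[user].add(feature)

    return {user: len(features) / len(catalog) for user, features in used.items()}


# Hypothetical data: a 10-feature product and a handful of usage events.
CATALOG = [f"feature_{i}" for i in range(10)]
EVENTS = [
    ("ana", "feature_0"),
    ("ana", "feature_1"),
    ("ana", "feature_0"),  # repeat use does not count twice
    ("ben", "feature_3"),
]

utilization = feature_utilization(EVENTS, CATALOG)
print(utilization)
```

Measuring breadth of use per user, rather than total clicks, is what exposes a "maze": heavy activity concentrated in a few features still shows up as low utilization.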

2. The Decision: Reacting to the Hype

When the Generative AI hype wave hit, leadership saw it as a "game changer." The mandate came down: "Use AI. Give them a chatbot."

The decision was impulsive. The team, excited but without a clear plan, decided to double down on a conversational feature. The idea was simple: a chat interface would allow users to ask questions in plain language, making the complex dashboard feel fluid and intuitive. It was a classic case of a "solution looking for a problem," driven by the fear of missing out.

3. Why It Was a Bad Decision (The Reality Check)

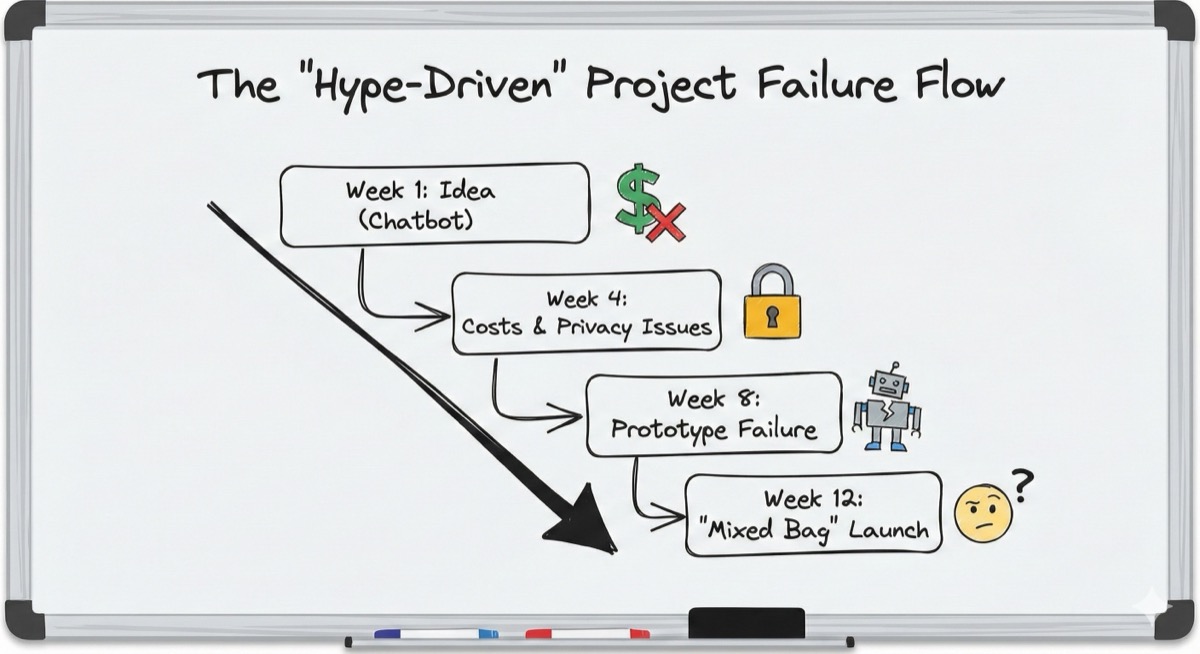

The project quickly turned into a downward spiral. Because the team was building AI for the sake of AI, they encountered a series of predictable failures.

* Data Misalignment: The training data was compiled by engineers who had never spoken to a user. The model learned the wrong patterns and couldn't handle real-world, messy queries.

* Tech-First Focus: The team obsessed over using the latest, most expensive models instead of focusing on the user experience.

* UX Gaps: The simple chatbot interface was a blank slate that didn't guide users, leading to frustration when the AI couldn't answer their broad questions.

The result was a "mixed bag" launch that was late, over budget, and failed to solve the core problem of low utilization.

4. The Right Way: The AI System Blueprint

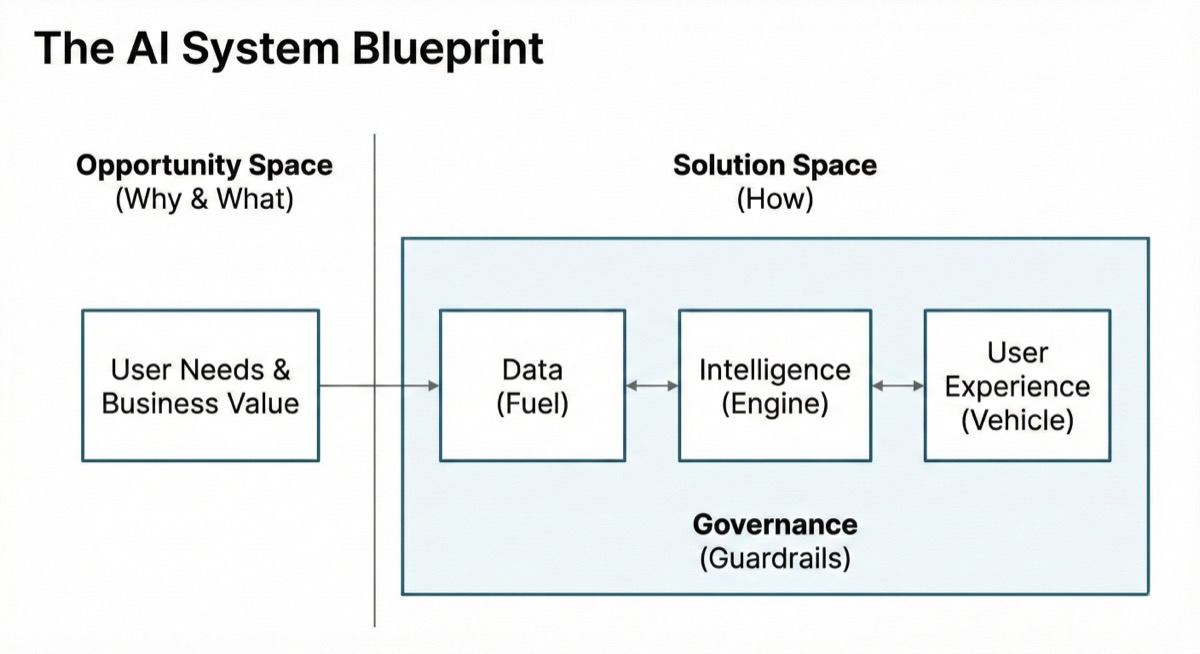

To avoid this fate, we must shift from "shiny object thinking" to a structured, holistic approach. Building AI that works requires a mental model that connects the business, the user, and the technology.

We call this the AI System Blueprint, which is divided into two main spaces:

* The Opportunity Space (Why & What): Start here. What is the actual customer need? What is the business value? If you can't answer this, don't build it.

* The Solution Space (How): This is where you build the system, which is a delicate balance of three components:

  * Data (The Fuel): High-quality, relevant data is what powers the system.

  * Intelligence (The Engine): The AI models themselves.

  * User Experience (The Vehicle): The interface that delivers the value and manages the AI's inevitable errors.

All of this must be wrapped in solid Governance (Guardrails) to manage risk, privacy, and compliance.
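One way to make the blueprint concrete is to treat it as a checklist that a project must fill in before work starts. The sketch below is only an illustration under that assumption: the class name `AISystemBlueprint`, its field names, and the sample project are hypothetical, chosen to mirror the two spaces and the governance wrapper described above.

```python
from dataclasses import dataclass, fields


@dataclass
class AISystemBlueprint:
    # Opportunity Space (why and what)
    customer_need: str = ""
    business_value: str = ""
    # Solution Space (how)
    data: str = ""
    intelligence: str = ""
    user_experience: str = ""
    # Governance (guardrails)
    governance: str = ""

    def missing(self):
        """List the blueprint parts that have not been answered yet."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

    def opportunity_defined(self):
        """True only when both the customer need and the business value are stated."""
        return bool(self.customer_need.strip() and self.business_value.strip())

    def ready_to_build(self):
        """True only when every part of the blueprint has an answer."""
        return not self.missing()


# Hypothetical reconstruction of the chatbot project: technology first, nothing else.
chatbot_project = AISystemBlueprint(
    intelligence="latest large language model",
    user_experience="blank chat box",
)

print(chatbot_project.opportunity_defined())  # False
print(chatbot_project.missing())
```

Filled in this way, the chatbot project fails the first gate: the Opportunity Space is empty, so under the blueprint the Solution Space work should not have started.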

The lesson is clear: don't just build an AI feature. Build a complete AI system that solves a real problem.

---


Written by

Ahmad Hussein

AI Systems Architect specializing in LLM orchestration, RAG systems, and scalable cloud platforms. Building secure AI solutions for Fintech, Robotics, and Legal-tech.